IntellIoT

Intelligent, distributed, human-centered and trustworthy IoT environments

- Funding: European Commission (EU)

- Project Code: IntellIoT

- Programme: Horizon 2020 (H2020-ICT-2018-20)

- Budget: 426250 € (Overall: 7997615 €)

- Start Date: 1st October 2020

- Duration: 36 months

- Website(s): intelliot.eu, CORDIS

Information

A new framework for human-defined autonomy

The Internet of Things (IoT) merges physical and virtual worlds. The European Commission is actively promoting the IoT as a next step towards the digitisation of our society and economy. The EU-funded IntellIoT project will develop a framework for intelligent IoT environments that execute semi-autonomous IoT applications, enabling a suite of novel use cases in which a human expert plays a key role in controlling and teaching the AI-enabled systems. Specifically, the project will focus on agriculture (tractors semi-autonomously operated in conjunction with drones), healthcare (patients monitored by sensors) and manufacturing (automated plants shared by multiple tenants who utilise machinery from third-party vendors). It will establish human-defined autonomy through distributed AI running on intelligent IoT devices.

The traditional cloud-centric IoT has clear limitations, e.g. unreliable connectivity, privacy concerns, and high round-trip times. IntellIoT overcomes these challenges in order to enable next-generation (NG) IoT applications. Its objective is to develop a framework for intelligent IoT environments that execute semi-autonomous IoT applications, which evolve by keeping the human in the loop as an integral part of the system.

Such intelligent IoT environments enable a suite of novel use cases. IntellIoT focuses on three: agriculture, where a tractor is semi-autonomously operated in conjunction with drones; healthcare, where patients are monitored by sensors to receive advice and interventions from virtual advisors; and manufacturing, where highly automated plants are shared by multiple tenants who utilize machinery from third-party vendors. In all cases a human expert plays a key role in controlling and teaching the AI-enabled systems.

The following 3 key features of IntellIoT’s approach are highly relevant for the work programme as they address the call’s challenges:

- Human-defined autonomy is established through distributed AI running on intelligent IoT devices under resource-constraints, while users teach and refine the AI via tactile interaction (with AR/VR).

- De-centralised, semi-autonomous IoT applications are enabled by self-aware agents of a hypermedia-based multi-agent system, defining a novel architecture for the NG IoT. It copes with interoperability by relying on W3C WoT standards and enabling automatic resolution of incompatibility constraints.

- An efficient, reliable computation & communication infrastructure is powered by 5G and dynamically manages and optimizes the usage of network and compute resources in a closed loop. Integrated security assurance mechanisms provide trust, and distributed ledger technologies (DLTs) are made accessible under resource constraints to enable smart contracts and provide transparency of performed actions.

- SIEMENS AKTIENGESELLSCHAFT – Germany (Coordinator)

- EURECOM – France

- AALBORG UNIVERSITET – Denmark

- OULUN YLIOPISTO – Finland

- TTCONTROL GMBH – Austria

- TTTECH COMPUTERTECHNIK AG – Austria

- Telecommunication Systems Institute – Greece

- PHILIPS ELECTRONICS NEDERLAND BV – Netherlands

- SPHYNX ANALYTICS LIMITED – Cyprus

- UNIVERSITAET ST. GALLEN – Switzerland

- HOLO-INDUSTRIE 4.0 SOFTWARE GMBH – Germany

- AVL COMMERCIAL DRIVELINE & TRACTOR ENGINEERING GMBH – Austria

- STARTUP COLORS UG – Germany

- PANEPISTIMIAKO GENIKO NOSOKOMEIO IRAKLEIOU – Greece

Related Projects

QualiMaster

PORTDIAL

Partensor

ORAMA

Platoons

OPTIMA

NOPTILUS

LEADS

IntellIoT

HERMES

Green.Dat.AI

CYRENE

Information

Managing supply chain (SC) activities today is increasingly complex. One reason is the lack of an integrated means for security officers and operators to protect their interconnected critical infrastructures and cyber systems in the new digital era. The EU-funded CYRENE project aims to enhance the security, privacy, resilience, accountability and trustworthiness of supply chains, through the provision of a novel and dynamic Conformity Assessment Process (CAP) that evaluates the security and resilience of SC services. The CAP also assesses the interconnected IT infrastructures composing these services, and the individual devices that support the operations of the SCs. A new collaborative, multilevel, evidence-driven risk-and-privacy assessment approach will be validated in the scope of realistic scenarios/conditions comprising real-life SC infrastructures and end-users.

Despite the tremendous socio-economic importance of Supply Chains (SCs), security officers and operators still have no easy and integrated way to protect their interconnected Critical Infrastructures (CIs) and cyber systems in the new digital era. CYRENE's vision is to enhance the security, privacy, resilience, accountability and trustworthiness of SCs through the provision of a novel and dynamic Conformity Assessment Process (CAP) that evaluates the security and resilience of supply chain services, the interconnected IT infrastructures composing these services, and the individual devices that support the operations of the SCs. To meet its objectives, the proposed CAP is based on a collaborative, multi-level, evidence-driven Risk and Privacy Assessment approach that supports, at different levels, the SCs' security officers and operators in recognizing, identifying, modelling, and dynamically analysing advanced persistent threats and vulnerabilities, as well as in handling daily cyber-security and privacy risks and data breaches.

CYRENE will be validated in the scope of realistic scenarios/conditions comprising real-life supply chain infrastructures and end-users. Furthermore, the project will ensure the active engagement of a large number of external stakeholders as a means of developing a wider ecosystem around the project's results, which will set the basis for CYRENE's large-scale adoption and global impact.

- MAGGIOLI SPA – Italy

- CENTRO RICERCHE FIAT SCPA – Italy

- FUNDACION DE LA COMUNIDAD VALENCIANA PARA LA INVESTIGACION, PROMOCION Y ESTUDIOS COMERCIALES DE VALENCIAPORT – Spain

- STOCKHOLMS UNIVERSITET – Sweden

- Telecommunication Systems Institute – Greece

- University of Novi Sad Faculty of Sciences – Serbia

- FOCAL POINT – Belgium

- PRIVANOVA SAS – France

- HYPERBOREA SRL – Italy

- CYBERLENS BV – Netherlands

- ZELUS IKE – Greece

- SPHYNX TECHNOLOGY SOLUTIONS AG – Switzerland

- IOTAM INTERNET OF THINGS APPLICATIONS AND MULTI LAYER DEVELOPMENT LTD – Cyprus

- UBITECH LIMITED – Cyprus

Other Projects

VARCITIES

ePower

BLEeper

WMatch

AMPERE

EDRA

Partensor

ECOSCALE

EXTRA

COSSIM

QualiMaster

SpeDial

FI-STAR

LEADS

HERMES

VARCITIES

Visionary Nature Based Actions for Health, Wellbeing & Resilience in Cities

- Funding: European Commission (EU)

- Project Code: VARCITIES

- Programme: Horizon 2020 (H2020-EU.3.5.2.)

- Budget: 713750 € (Overall: 11129569.50 €)

- Start Date: 1st September 2020

- Duration: 53 months

- Website(s): www.varcities.eu, CORDIS

Information

In an increasingly urbanised world, governments are focussing on boosting cities’ productivity and improving citizens’ living conditions and quality of life. Despite efforts to transform the challenges facing cities into opportunities, problems such as overburdened social services and health facilities, air pollution and exacerbated heat create a bleak outlook. With these challenges in mind, the EU-funded VARCITIES project aims to create a vision for future cities with the citizen and the so-called human community at the centre. It will therefore implement innovative ideas and add value by creating sustainable models for improving the health and well-being of citizens facing diverse climatic conditions and challenges around Europe. This will be achieved through shared public spaces that make cities liveable and welcoming.

In an increasingly urbanising world, governments and international corporations strive to increase the productivity of cities, recognized as economic growth hubs, while ensuring better quality of life and living conditions for citizens. Although international organisations, researchers and others are making significant efforts to transform the challenges of cities into opportunities, visions of our urban future are trending towards bleak. Social services and health facilities are significantly strained by the growth in urban populations (70% by 2050).

Air pollution and urban heat islands are worsening. Nature will struggle to compensate in the future city, as rural land is predicted to shrink by 30%, affecting liveability. VARCITIES puts the citizen and the “human community” at the centre of the future cities' vision: future cities should evolve into human-centred cities. The vision of VARCITIES is to implement real, visionary ideas and add value by establishing sustainable models for increasing the health and well-being (H&WB) of citizens (children, young people, the middle-aged, the elderly) who are exposed to diverse climatic conditions and challenges around Europe (e.g. from harsh winters in Skelleftea-SE to hot summers in Chania-GR, from deprived areas in Novo mesto-SI to increased pollution in Malta) through shared public spaces that make cities liveable and welcoming.

- Telecommunication Systems Institute – Greece

- CYCLOPOLIS SISTIMATA KOINOXRISTON PODILATON IDIOTIKI KEFALEOUXIKI ETERIA – Greece

- DIMOS CHANIA – Greece

- ACCADEMIA EUROPEA DI BOLZANO – Italy

- UNIVERSITA DEGLI STUDI DI PADOVA – Italy

- UNISMART – FONDAZIONE UNIVERSITA DEGLI STUDI DI PADOVA – Italy

- COMUNE DI CASTELFRANCO VENETO – Italy

- UNIVERSITA TA MALTA – Malta

- DARTTEK LTD – Malta

- KORONA INZENIRING DD – Slovenia

- RAZVOJNI CENTER NOVO MESTO SVETOVANJE IN RAZVOJ DOO – Slovenia

- MESTNA OBCINA NOVO MESTO – Slovenia

- VAN ROMPAEY SARA – Belgium

- STAD LEUVEN – Belgium

- IES R&D – Ireland

- LOUTH COUNTY COUNCIL – Ireland

- SKELLEFTEA KOMMUN – Sweden

- PROSPEX INSTITUTE – Belgium

- CROWDHELIX LIMITED – Ireland

- INLECOM INNOVATION ASTIKI MI KERDOSKOPIKI ETAIREIA – Greece

- STICHTING ISOCARP INSTITUTE CENTER OF URBAN EXCELLENCE – Netherlands

- UNIVERSITETET I BERGEN – Norway

- BERGEN KOMMUNE – Norway

- DECCA TECHNOLOGY AS – Norway

- SENSEDGE RAZVOJ INOVATIVNIH RESITEV DOO – Slovenia

BLEeper

A low-cost outdoor location tracking solution for shoreline safety.

Information

TETRAMAX is a Horizon 2020 innovation action within the European Smart Anything Everywhere initiative, in the domain of customized and low-energy computing for Cyber Physical Systems and the Internet of Things. As a Digital Innovation Hub, TETRAMAX aims to bring added value to European industry, helping it gain a competitive advantage through faster digitization. TETRAMAX started in September 2017 and runs until August 2021.

The BLEeper project aims to improve safety on beaches. It uses low-cost, solar-powered, waterproof Bluetooth beacons on geo-fenced shorelines to provide high-accuracy location tracking for everyone equipped with some kind of low-cost wearable Bluetooth device (e.g. sandals, wristbands, life-vests). BLEeper will offer coastal surveillance superior to that of other available solutions, leading to significantly increased levels of safety for shoreline activities.

EDRA

Hardware-Assisted Decoupled Access Execution on the Digital Market: The EDRA Framework

- Funding: European Commission (EU)

- Project Code: EDRA

- Programme: H2020 (H2020-EU.1.2.1.)

- Budget: 100000 € (Overall: 100000 €)

- Start Date: 1st May 2019

- Duration: 18 months

- Website(s): CORDIS

Information

High performance computing framework in the cloud

Developing the next generation of high-performance computing (HPC) technologies, applications and systems towards exascale is a priority for the EU. The ‘Decoupled Access – Execute’ (DAE) approach is an HPC framework developed by the EU-funded project EXTRA for mapping applications to reconfigurable hardware. The EU-funded EDRA project will deploy virtual machines that integrate the EXTRA DAE architecture onto custom hardware within the cloud infrastructure of the Amazon Web Services marketplace, one of the largest cloud service providers. The project will focus on the commercialisation of EDRA on Amazon’s web service marketplace to ensure the EDRA framework is widely available and versatile for wide-ranging applications.

The FET project “EXTRA” (Exploiting eXascale Technology with Reconfigurable Architectures) aimed to devise efficient ways to deploy ultra-efficient heterogeneous compute nodes for future exascale High Performance Computing (HPC) applications. One major outcome was a framework for mapping applications to reconfigurable hardware, relying on the Decoupled Access – Execute (DAE) approach.

This project focuses on the commercialization of the EXTRA framework for cloud HPC platforms. More specifically, it targets the deployment of Virtual Machines (VMs) that integrate the EXTRA DAE Reconfigurable Architecture (EDRA) on custom hardware within the cloud infrastructure of one of the largest cloud service providers, the Amazon Web Services marketplace. End-users will be able to automatically map their applications to “EDRA-enhanced” VMs, and directly deploy them onto Amazon’s cloud infrastructure for optimal performance and minimal cost.

Towards a successful exploitation outcome, the project will focus on (a) applying the required software- and hardware-level modifications to the current EXTRA framework to comply with Amazon's infrastructure, (b) devising a business strategy to effectively address various user groups, and (c) disseminating the benefits of this solution at large public events and summits.

- Telecommunication Systems Institute – Greece

ECOSCALE

Energy-efficient Heterogeneous COmputing at exaSCALE

- Funding: European Commission (EU)

- Project Code: ECOSCALE

- Programme: Horizon 2020 (H2020-EU.1.2.2.)

- Budget: 688625.01 € (Overall: 4237397.50 €)

- Start Date: 1st October 2015

- Duration: 46 months

- Website(s): CORDIS

Information

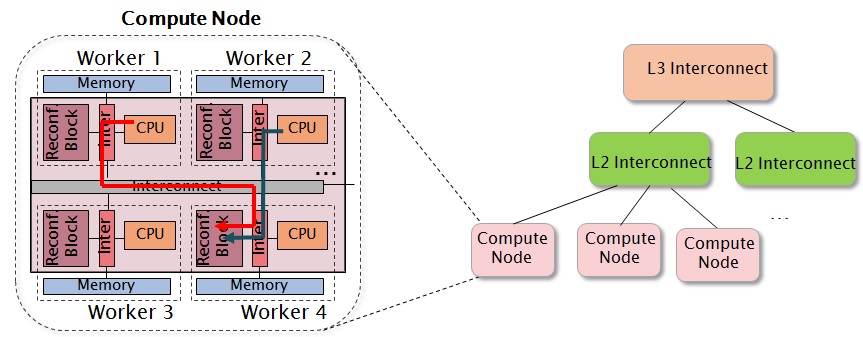

In order to sustain the ever-increasing demand for storing, transferring and, above all, processing data, HPC servers need to improve their capabilities. Scaling the number of cores alone is no longer a feasible solution due to increasing utility costs and power consumption limitations. While current HPC systems can offer petaflop performance, their architecture limits their capabilities in terms of scalability and energy consumption. Extrapolating from the top HPC systems, such as China's Tianhe-2 supercomputer, we would require an enormous 1 GW of power to sustain exaflop performance, and a similar, though smaller, figure results even if we take the best system of the Green 500 list as the initial reference.
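The extrapolation in this paragraph can be sketched as a simple linear scaling: if energy efficiency (FLOPS per watt) stays constant, power grows in proportion to performance. The reference figures below (30 petaflops at 20 MW) are hypothetical round numbers chosen only for illustration, not measurements from the source, and the function name `power_at_target` is likewise an assumption.

```python
def power_at_target(perf_pflops: float, power_mw: float,
                    target_pflops: float = 1000.0) -> float:
    """Estimate power (MW) needed at target_pflops, assuming the
    reference system's FLOPS-per-watt efficiency stays constant."""
    if perf_pflops <= 0:
        raise ValueError("perf_pflops must be positive")
    # Linear scaling: power grows by the same factor as performance.
    return power_mw * (target_pflops / perf_pflops)


# Hypothetical reference system (illustrative values, not from the source).
reference_pflops = 30.0   # sustained performance in petaflops
reference_mw = 20.0       # power draw in megawatts

# 1 exaflop = 1000 petaflops, the default target.
exa_mw = power_at_target(reference_pflops, reference_mw)
print(f"Estimated power at 1 exaflop: {exa_mw / 1000:.2f} GW")
```

With these assumed inputs the estimate already falls in the hundreds of megawatts; cooling and other facility overheads, which this sketch ignores, push such estimates higher still.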

Apart from improving transistor and integration technology, what is needed is to refine the HPC application development and the HPC architecture design. Towards this end, ECOSCALE will analyse the characteristics and trends of current and future applications in order to provide a hybrid MPI+OpenCL programming environment, a hierarchical architecture, runtime system and middleware, and a shared distributed reconfigurable hardware based acceleration.

Publications

Peer-reviewed articles

- Acceleration by Inline Cache for Memory-Intensive Algorithms on FPGA via High-Level Synthesis
  Author(s): Liang Ma, Luciano Lavagno, Mihai Teodor Lazarescu, Arslan Arif
  Published in: IEEE Access, Issue 5, 2017, Page(s) 18953-18974, ISSN 2169-3536
  DOI: 10.1109/ACCESS.2017.2750923

- Efficient FPGA Implementation of OpenCL High-Performance Computing Applications via High-Level Synthesis
  Author(s): Fahad Bin Muslim, Liang Ma, Mehdi Roozmeh, Luciano Lavagno
  Published in: IEEE Access, Issue 5, 2017, Page(s) 2747-2762, ISSN 2169-3536
  DOI: 10.1109/ACCESS.2017.2671881

- Performance and energy-efficient implementation of a smart city application on FPGAs
  Author(s): Arslan Arif, Felipe A. Barrigon, Francesco Gregoretti, Javed Iqbal, Luciano Lavagno, Mihai Teodor Lazarescu, Liang Ma, Manuel Palomino, Javier L. L. Segura
  Published in: Journal of Real-Time Image Processing, 2018, ISSN 1861-8200
  DOI: 10.1007/s11554-018-0792-x

- DDRNoC
  Author(s): Ahsen Ejaz, Vassilios Papaefstathiou, Ioannis Sourdis
  Published in: ACM Transactions on Architecture and Code Optimization, Issue 15/2, 2018, Page(s) 1-24, ISSN 1544-3566
  DOI: 10.1145/3200201

- Decoupled Fused Cache
  Author(s): Evangelos Vasilakis, Vassilis Papaefstathiou, Pedro Trancoso, Ioannis Sourdis
  Published in: ACM Transactions on Architecture and Code Optimization, Issue 15/4, 2019, Page(s) 1-23, ISSN 1544-3566
  DOI: 10.1145/3293447

Conference proceedings

- A Scalable Runtime for the ECOSCALE Heterogeneous Exascale Hardware Platform
  Author(s): Paul Harvey, Konstantin Bakanov, Ivor Spence, Dimitrios S. Nikolopoulos
  Published in: Proceedings of the 6th International Workshop on Runtime and Operating Systems for Supercomputers – ROSS ’16, 2016, Page(s) 1-8
  DOI: 10.1145/2931088.2931090

- ECOSCALE: Reconfigurable Computing and Runtime System for Future Exascale Systems
  Author(s): Mavroidis, Iakovos; Papaefstathiou, Ioannis; Lavagno, Luciano; Nikolopoulos, Dimitrios; Koch, Dirk; Goodacre, John; Sourdis, Ioannis; Papaefstathiou, Vassilis; Coppola, Marcello; Palomino, Manuel
  Published in: Design, Automation & Test in Europe (DATE) 2016, 2016
  DOI: 10.5281/zenodo.34893

- Energy-efficient FPGA Implementation of the k-Nearest Neighbors Algorithm Using OpenCL
  Author(s): Fahad Muslim, Alexandros Demian, Liang Ma, Luciano Lavagno, Affaq Qamar
  Published in: Position Papers of the 2016 Federated Conference on Computer Science and Information Systems, 2016, Page(s) 141-145
  DOI: 10.15439/2016F327

- Implementation of a performance optimized database join operation on FPGA-GPU platforms using OpenCL
  Author(s): Mehdi Roozmeh, Luciano Lavagno
  Published in: 2017 IEEE Nordic Circuits and Systems Conference (NORCAS): NORCHIP and International Symposium of System-on-Chip (SoC), 2017, Page(s) 1-6
  DOI: 10.1109/NORCHIP.2017.8124981

- High Performance and Low Power Monte Carlo Methods to Option Pricing Models via High Level Design and Synthesis
  Author(s): Liang Ma, Fahad Bin Muslim, Luciano Lavagno
  Published in: 2016 European Modelling Symposium (EMS), 2016, Page(s) 157-162
  DOI: 10.1109/EMS.2016.036

- Exact and Heuristic Allocation of Multi-kernel Applications to Multi-FPGA Platforms
  Author(s): Junnan Shan, Mario R. Casu, Jordi Cortadella, Luciano Lavagno, Mihai T. Lazarescu
  Published in: Proceedings of the 56th Annual Design Automation Conference 2019 on – DAC ’19, 2019, Page(s) 1-6
  DOI: 10.1145/3316781.3317821

- FusionCache: Using LLC tags for DRAM cache
  Author(s): Evangelos Vasilakis, Vassilis Papaefstathiou, Pedro Trancoso, Ioannis Sourdis
  Published in: 2018 Design, Automation & Test in Europe Conference & Exhibition (DATE), 2018, Page(s) 593-596
  DOI: 10.23919/date.2018.8342077

- A Survey on FPGA Virtualization
  Author(s): Anuj Vaishnav, Khoa Dang Pham and Dirk Koch
  Published in: 28th International Conference on Field Programmable Logic and Application (FPL), 2018

- Resource Elastic Virtualization for FPGAs Using OpenCL
  Author(s): Anuj Vaishnav, Khoa Dang Pham, Dirk Koch, James Garside
  Published in: 2018 28th International Conference on Field Programmable Logic and Applications (FPL), 2018, Page(s) 111-1117
  DOI: 10.1109/fpl.2018.00028

- ZUCL: A ZYNQ UltraScale+ Framework for OpenCL HLS Applications
  Author(s): Khoa Pham, Anuj Vaishnav, Malte Vesper, Dirk Koch
  Published in: FSP Workshop 2018; Fifth International Workshop on FPGAs for Software Programmers, Issue 31-31 Aug. 2018, 2018

- Live Migration for OpenCL FPGA Accelerators
  Author(s): Anuj Vaishnav, Khoa Pham, Dirk Koch
  Published in: 2018 International Conference on Field-Programmable Technology (FPT), 2018, Page(s) 38-45
  DOI: 10.1109/fpt.2018.00017

- IPRDF: An Isolated Partial Reconfiguration Design Flow for Xilinx FPGAs
  Author(s): Khoa Pham, Edson Horta, Dirk Koch, Anuj Vaishnav, Thomas Kuhn
  Published in: 2018 IEEE 12th International Symposium on Embedded Multicore/Many-core Systems-on-Chip (MCSoC), 2018, Page(s) 36-43
  DOI: 10.1109/mcsoc2018.2018.00018

- FreewayNoC: A DDR NoC with Pipeline Bypassing
  Author(s): Ahsen Ejaz, Vassilios Papaefstathiou, Ioannis Sourdis
  Published in: 2018 Twelfth IEEE/ACM International Symposium on Networks-on-Chip (NOCS), 2018, Page(s) 1-8
  DOI: 10.1109/nocs.2018.8512160

- EFCAD – An Embedded FPGA CAD Tool Flow for Enabling On-chip Self-Compilation
  Author(s): Khoa Dang Pham, Malte Vesper, Dirk Koch, Eddie Hung
  Published in: 2019 IEEE 27th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), 2019, Page(s) 5-8
  DOI: 10.1109/fccm.2019.00011

- Heterogeneous Resource-Elastic Scheduling for CPU+FPGA Architectures
  Author(s): Anuj Vaishnav, Khoa Dang Pham, Dirk Koch
  Published in: Proceedings of the 10th International Symposium on Highly-Efficient Accelerators and Reconfigurable Technologies – HEART 2019, 2019, Page(s) 1-6
  DOI: 10.1145/3337801.3337819

- Scalable Filtering Modules for Database Acceleration on FPGAs
  Author(s): Kristiyan Manev, Anuj Vaishnav, Charalampos Kritikakis, Dirk Koch
  Published in: Proceedings of the 10th International Symposium on Highly-Efficient Accelerators and Reconfigurable Technologies – HEART 2019, 2019, Page(s) 1-6
  DOI: 10.1145/3337801.3337810

- LLC-Guided Data Migration in Hybrid Memory Systems
  Author(s): Evangelos Vasilakis, Vassilis Papaefstathiou, Pedro Trancoso, Ioannis Sourdis
  Published in: 2019 IEEE International Parallel and Distributed Processing Symposium (IPDPS), 2019, Page(s) 932-942
  DOI: 10.1109/ipdps.2019.00101

- End-to-end Dynamic Stream Processing on Maxeler HLS Platforms
  Author(s): Charalampos Kritikakis, Dirk Koch
  Published in: 2019 IEEE 30th International Conference on Application-specific Systems, Architectures and Processors (ASAP), 2019, Page(s) 59-66
  DOI: 10.1109/asap.2019.00-29

- BITMAN: A tool and API for FPGA bitstream manipulations
  Author(s): Khoa Dang Pham, Edson Horta, Dirk Koch
  Published in: Design, Automation & Test in Europe Conference & Exhibition (DATE), 2017, 2017, Page(s) 894-897
  DOI: 10.23919/date.2017.7927114

- Accelerating Linux Bash Commands on FPGAs Using Partial Reconfiguration
  Author(s): Edson Horta, Xinzi Shen, Khoa Pham, Dirk Koch
  Published in: FPGAs for Software Programmers (FSP), 2017, 2017

Book chapters

- HLS Algorithmic Explorations for HPC Execution on Reconfigurable Hardware – ECOSCALE
  Author(s): Pavlos Malakonakis, Konstantinos Georgopoulos, Aggelos Ioannou, Luciano Lavagno, Ioannis Papaefstathiou, Iakovos Mavroidis
  Published in: Applied Reconfigurable Computing. Architectures, Tools, and Applications – 14th International Symposium, ARC 2018, Santorini, Greece, May 2-4, 2018, Proceedings, Issue 10824, 2018, Page(s) 724-736
  DOI: 10.1007/978-3-319-78890-6_58

- HLS Enabled Partially Reconfigurable Module Implementation
  Author(s): Nicolae Bogdan Grigore, Charalampos Kritikakis, Dirk Koch
  Published in: Architecture of Computing Systems – ARCS 2018, Issue 10793, 2018, Page(s) 269-282
  DOI: 10.1007/978-3-319-77610-1_20

- Energy-Efficient Heterogeneous Computing at exaSCALE – ECOSCALE
  Author(s): Konstantinos Georgopoulos, Iakovos Mavroidis, Luciano Lavagno, Ioannis Papaefstathiou, Konstantin Bakanov
  Published in: Hardware Accelerators in Data Centers, 2019, Page(s) 199-213
  DOI: 10.1007/978-3-319-92792-3_11

- A Novel Framework for Utilising Multi-FPGAs in HPC Systems
  Author(s): K. Georgopoulos, K. Bakanov, I. Mavroidis, I. Papaefstathiou, A. Ioannou, P. Malakonakis, K. Pham, D. Koch, L. Lavagno
  Published in: Heterogeneous Computing Architectures – Challenges and Vision, 2019, Page(s) 153-189
  DOI: 10.1201/9780429399602-7

- Telecommunication Systems Institute – Greece

- THE QUEEN’S UNIVERSITY OF BELFAST – United Kingdom

- STMICROELECTRONICS GRENOBLE 2 SAS – France

- ACCIONA CONSTRUCCION SA – Spain

- THE UNIVERSITY OF MANCHESTER – United Kingdom

- POLITECNICO DI TORINO – Italy

- CHALMERS TEKNISKA HOEGSKOLA AB – Sweden

- SYNELIXIS LYSEIS PLIROFORIKIS AUTOMATISMOU & TILEPIKOINONION ANONIMI ETAIRIA – Greece

- IDRYMA TECHNOLOGIAS KAI EREVNAS – Greece

EXTRA

Exploiting eXascale Technology with Reconfigurable Architectures

- Funding: European Commission (EU)

- Project Code: EXTRA

- Programme: Horizon 2020 (H2020-EU.1.2.2.)

- Budget: 420000 € (Overall: 3989931.25 €)

- Start Date: 1st September 2015

- Duration: 36 months

- Website(s): www.extrahpc.eu, CORDIS

More Information

To handle the stringent performance requirements of future exascale High Performance Computing (HPC) applications, HPC systems need ultra-efficient heterogeneous compute nodes. To reduce power and increase performance, such compute nodes will require reconfiguration as an intrinsic feature, so that specific HPC application features can be optimally accelerated at all times, even if they regularly change over time.

In the EXTRA project, we create a new and flexible exploration platform for developing reconfigurable architectures, design tools and HPC applications with run-time reconfiguration built-in from the start. The idea is to enable the efficient co-design and joint optimization of architecture, tools, applications, and reconfiguration technology in order to prepare for the necessary HPC hardware nodes of the future.

The project EXTRA covers the complete chain from architecture up to the application:

- More coarse-grain reconfigurable architectures that allow reconfiguration on higher functionality levels and therefore provide much faster reconfiguration than at the bit level.

- The development of just-in-time synthesis tools that are optimized for fast (but still efficient) re-synthesis of application phases to new, specialized implementations through reconfiguration.

- The optimization of applications that maximally exploit reconfiguration.

- Suggestions for improvements to reconfigurable technologies to enable the proposed reconfiguration of the architectures.

In conclusion, EXTRA focuses on the fundamental building blocks for run-time reconfigurable exascale HPC systems: new reconfigurable architectures with very low reconfiguration overhead, new tools that truly take reconfiguration as a design concept, and applications that are tuned to maximally exploit run-time reconfiguration techniques.

Our goal is to provide the European platform for run-time reconfiguration to maintain Europe’s competitive edge and leadership in run-time reconfigurable computing.

Publications

Conference proceedings (65)

- Optimizing streaming stencil time-step designs via FPGA floorplanningAuthor(s): Marco Rabozzi, Giuseppe Natale, Biagio Festa, Antonio Miele, Marco D. Santambrogio

Published in: 2017 27th International Conference on Field Programmable Logic and Applications (FPL), 2017, Page(s) 1-4

DOI: 10.23919/FPL.2017.8056764 - FPGA-based PairHMM Forward Algorithm for DNA Variant CallingAuthor(s): Davide Sampietro, Chiara Crippa, Lorenzo Di Tucci, Emanuele Del Sozzo, Marco D. Santambrogio

Published in: 2018 IEEE 29th International Conference on Application-specific Systems, Architectures and Processors (ASAP), 2018, Page(s) 1-8

DOI: 10.1109/ASAP.2018.8445119 - Heterogeneous exascale supercomputing: The role of CAD in the exaFPGA projectAuthor(s): M. Rabozzi, G. Natale, E. Del Sozzo, A. Scolari, L. Stornaiuolo, M. D. Santambrogio

Published in: Design, Automation & Test in Europe Conference & Exhibition (DATE), 2017, 2017, Page(s) 410-415

DOI: 10.23919/DATE.2017.7927025 - CGRA Tool Flow for Fast Run-Time ReconfigurationAuthor(s): Florian Fricke, André Werner, Keyvan Shahin, Michael Huebner

Published in: Proc. of the 14th International Symposium on Reconfigurable Computing: Architectures, Tools, and Applications (ARC), 2018, Page(s) 661-672

DOI: 10.1007/978-3-319-78890-6_53 - Superimposed in-circuit debugging for self-healing FPGA overlaysAuthor(s): Alexandra Kourfali, Dirk Stroobandt

Published in: 2018 IEEE 19th Latin-American Test Symposium (LATS), 2018, Page(s) 1-6

DOI: 10.1109/LATW.2018.8349688 - Tool flow for automatic generation of architectures and test-cases to enable the evaluation of CGRAs in the context of HPC applicationsAuthor(s): Florian Fricke, Andre Werner, Michael Hubner

Published in: 2017 Conference on Design and Architectures for Signal and Image Processing (DASIP), 2017, Page(s) 1-2

DOI: 10.1109/DASIP.2017.8122124 - Hardware Compilation of Deep Neural Networks: An OverviewAuthor(s): Ruizhe Zhao, Shuanglong Liu, Ho-Cheung Ng, Erwei Wang, James J. Davis, Xinyu Niu, Xiwei Wang, Huifeng Shi, George A. Constantinides, Peter Y. K. Cheung, Wayne Luk

Published in: 2018 IEEE 29th International Conference on Application-specific Systems, Architectures and Processors (ASAP), 2018, Page(s) 1-8

DOI: 10.1109/ASAP.2018.8445088 - SICTA: A superimposed in-circuit fault tolerant architecture for SRAM-based FPGAsAuthor(s): Alexandra Kourfali, Amit Kulkarni, Dirk Stroobandt

Published in: 2017 IEEE 23rd International Symposium on On-Line Testing and Robust System Design (IOLTS), 2017, Page(s) 5-8

DOI: 10.1109/IOLTS.2017.8046168 - ADAM – Automated Design Analysis and Merging for Speeding up FPGA DevelopmentAuthor(s): Ho-Cheung Ng, Shuanglong Liu, Wayne Luk

Published in: Proceedings of the 2018 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays – FPGA ’18, 2018, Page(s) 189-198

DOI: 10.1145/3174243.3174247 - Hierarchical force-based block spreading for analytical FPGA placementAuthor(s): Dries Vercruyce, Elias Vansteenkiste, Dirk Stroobandt

Published in: FPL 2018, 2018 - The Role of CAD Frameworks in Heterogeneous FPGA-Based Cloud SystemsAuthor(s): Lorenzo Di Tucci, Marco Rabozzi, Luca Stornaiuolo, Marco D. Santambrogio

Published in: 2017 IEEE International Conference on Computer Design (ICCD), 2017, Page(s) 423-426

DOI: 10.1109/ICCD.2017.74 - CRRS: Custom Regression and Regularisation Solver for Large-scale Linear Systems,Author(s): A.-I. Cross, L. Guo, W. Luk and M. Salmon

Published in: International Conference on Field-Programmable Logic and Applications, 2018 - A NoC-based custom FPGA configuration memory architecture for ultra-fast micro-reconfigurationAuthor(s): Amit Kulkarni, Poona Bahrebar, Dirk Stroobandt, Giulio Stramondo, Catalin Bogdan Ciobanu, Ana Lucia Varbanescu

Published in: 2017 International Conference on Field Programmable Technology (ICFPT), 2017, Page(s) 203-206

DOI: 10.1109/FPT.2017.8280141 - An open reconfigurable research platform as stepping stone to exascale high-performance computingAuthor(s): Dirk Stroobandt, Catalin Bogdan Ciobanu, Marco D. Santambrogio, Gabriel Figueiredo, Andreas Brokalakis, Dionisios Pnevmatikatos, Michael Huebner, Tobias Becker, Alex J. W. Thom

Published in: Design, Automation & Test in Europe Conference & Exhibition (DATE), 2017, 2017, Page(s) 416-421

DOI: 10.23919/DATE.2017.7927026

- Towards Application-Centric Parallel Memories

Author(s): Giulio Stramondo, Catalin Bogdan Ciobanu, Ana Lucia Varbanescu and Cees De Laat

Published in: EuroPar Workshops 2018 – HeteroPar’18, 2018

- From Tensor Algebra to Hardware Accelerators: Generating Streaming Architectures for Solving Partial Differential Equations

Author(s): Francis P. Russell, James Stanley Targett, Wayne Luk

Published in: 2018 IEEE 29th International Conference on Application-specific Systems, Architectures and Processors (ASAP), 2018, Page(s) 1-8

DOI: 10.1109/ASAP.2018.8445093

- A Parallel, Energy Efficient Hardware Architecture for the merAligner on FPGA Using Chisel HCL

Author(s): Lorenzo Di Tucci, Davide Conficconi, Alessandro Comodi, Steven Hofmeyr, David Donofrio, Marco D. Santambrogio

Published in: 2018 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), 2018, Page(s) 214-217

DOI: 10.1109/IPDPSW.2018.00041

- In-Circuit FPGA Debugging using Parameterised Reconfigurations

Author(s): Alexandra Kourfali and Dirk Stroobandt

Published in: 54th ACM/ESDA/IEEE Design Automation Conference (DAC), 2017

- Superimposed In-Circuit Fault Mitigation for Dynamically Reconfigurable FPGAs

Author(s): Alexandra Kourfali, David Merodio Codinachs and Dirk Stroobandt

Published in: IEEE Conference on Radiation Effects on Components and Systems (RADECS), 2017

- An FPGA-Based Acceleration Methodology and Performance Model for Iterative Stencils

Author(s): Enrico Reggiani, Giuseppe Natale, Carlo Moroni, Marco D. Santambrogio

Published in: 2018 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), 2018, Page(s) 115-122

DOI: 10.1109/IPDPSW.2018.00026

- Towards Hardware Accelerated Reinforcement Learning for Application-Specific Robotic Control

Author(s): Shengjia Shao, Jason Tsai, Michal Mysior, Wayne Luk, Thomas Chau, Alexander Warren, Ben Jeppesen

Published in: 2018 IEEE 29th International Conference on Application-specific Systems, Architectures and Processors (ASAP), 2018, Page(s) 1-8

DOI: 10.1109/ASAP.2018.8445099

- From exaflop to exaflow

Author(s): Tobias Becker, Pavel Burovskiy, Anna Maria Nestorov, Hristina Palikareva, Enrico Reggiani, Georgi Gaydadjiev

Published in: Design, Automation & Test in Europe Conference & Exhibition (DATE), 2017, 2017, Page(s) 404-409

DOI: 10.23919/DATE.2017.7927024

- MAX-PolyMem: High-Bandwidth Polymorphic Parallel Memories for DFEs

Author(s): Catalin Bogdan Ciobanu, Giulio Stramondo, Cees de Laat, Ana Lucia Varbanescu

Published in: 2018 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), 2018, Page(s) 107-114

DOI: 10.1109/IPDPSW.2018.00025

- Towards Efficient Convolutional Neural Network for Domain-Specific Applications on FPGA

Author(s): R. Zhao, H.C. Ng, W. Luk and X. Niu

Published in: International Conference on Field-Programmable Logic and Applications, 2018

- HLS Support for Polymorphic Parallel Memories

Author(s): Luca Stornaiuolo, Marco Rabozzi, Marco D. Santambrogio, Donatella Sciuto, Giulio Stramondo, Catalin Bogdan Ciobanu, Ana Lucia Varbanescu

Published in: Proceedings of the 26th IFIP/IEEE International Conference on Very Large Scale Integration (VLSI-SoC), 2018

- OXiGen: A Tool for Automatic Acceleration of C Functions Into Dataflow FPGA-Based Kernels

Author(s): Francesco Peverelli, Marco Rabozzi, Emanuele Del Sozzo, Marco D. Santambrogio

Published in: 2018 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), 2018, Page(s) 91-98

DOI: 10.1109/IPDPSW.2018.00023

- Accelerated Inference of Positive Selection on Whole Genomes

Author(s): Nikolaos Alachiotis, Charalampos Vatsolakis, Grigorios Chrysos and Dionisios N. Pnevmatikatos

Published in: International Conference on Field-Programmable Logic and Applications, 2018

- A CAD Open Platform for High Performance Reconfigurable Systems in the EXTRA Project

Author(s): Marco Rabozzi, Rolando Brondolin, Giuseppe Natale, Emanuele Del Sozzo, Michael Huebner, Andreas Brokalakis, Catalin Ciobanu, Dirk Stroobandt, Marco Domenico Santambrogio

Published in: 2017 IEEE Computer Society Annual Symposium on VLSI (ISVLSI), 2017, Page(s) 368-373

DOI: 10.1109/ISVLSI.2017.71

- Liquid: High quality scalable placement for large heterogeneous FPGAs

Author(s): Dries Vercruyce, Elias Vansteenkiste, Dirk Stroobandt

Published in: 2017 International Conference on Field Programmable Technology (ICFPT), 2017, Page(s) 17-24

DOI: 10.1109/FPT.2017.8280116

- A Scalable FPGA Design for Cloud N-Body Simulation

Author(s): Emanuele Del Sozzo, Marco Rabozzi, Lorenzo Di Tucci, Donatella Sciuto, Marco D. Santambrogio

Published in: 2018 IEEE 29th International Conference on Application-specific Systems, Architectures and Processors (ASAP), 2018, Page(s) 1-8

DOI: 10.1109/ASAP.2018.8445106

- EXTRA: An Open Platform for Reconfigurable Architectures

Author(s): Catalin Bogdan Ciobanu, Giulio Stramondo, Ana Lucia Varbanescu, Andreas Brokalakis, Antonis Nikitakis, Lorenzo Di Tucci, Marco Rabozzi, Luca Stornaiuolo, Marco D. Santambrogio, Grigorios Chrysos, Charalampos Vatsolakis, Charitopoulos Georgios, Dionisios Pnevmatikatos

Published in: Embedded Computer Systems: Architectures, Modeling, and Simulation (SAMOS XVIII), 2018 International Conference on, 2018

DOI: 10.1145/3229631.3236092

- A generic high throughput architecture for stream processing

Author(s): Christes Rousopoulos, Ektoras Karandeinos, Grigorios Chrysos, Apostolos Dollas, Dionisios N. Pnevmatikatos

Published in: 2017 27th International Conference on Field Programmable Logic and Applications (FPL), 2017, Page(s) 1-5

DOI: 10.23919/FPL.2017.8056796

- Five-point algorithm: An efficient cloud-based FPGA implementation

Author(s): Marco Rabozzi, Emanuele Del Sozzo, Lorenzo Di Tucci, Marco D. Santambrogio

Published in: 2018 IEEE 29th International Conference on Application-specific Systems, Architectures and Processors (ASAP), 2018, Page(s) 1-8

DOI: 10.1109/ASAP.2018.8445097

- A decoupled access-execute architecture for reconfigurable accelerators

Author(s): George Charitopoulos, Charalampos Vatsolakis, Grigorios Chrysos, Dionisios N. Pnevmatikatos

Published in: Proceedings of the 15th ACM International Conference on Computing Frontiers – CF ’18, 2018, Page(s) 244-247

DOI: 10.1145/3203217.3203267

- Online reconfigurable routing method for handling link failures in NoC-based MPSoCs

Author(s): Poona Bahrebar, Dirk Stroobandt

Published in: 2016 11th International Symposium on Reconfigurable Communication-centric Systems-on-Chip (ReCoSoC), 2016, Page(s) 1-8

DOI: 10.1109/ReCoSoC.2016.7533905

- Runtime-quality tradeoff in partitioning based multithreaded packing

Author(s): Dries Vercruyce, Elias Vansteenkiste, Dirk Stroobandt

Published in: 2016 26th International Conference on Field Programmable Logic and Applications (FPL), 2016, Page(s) 1-9

DOI: 10.1109/FPL.2016.7577300

- Efficient Hardware Debugging Using Parameterized FPGA Reconfiguration

Author(s): Alexandra Kourfali, Dirk Stroobandt

Published in: 2016 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), 2016, Page(s) 277-282

DOI: 10.1109/IPDPSW.2016.95

- A 16-Bit Reconfigurable Encryption Processor for p-Cipher

Author(s): Mohamed El-Hadedy, Hristina Mihajloska, Danilo Gligoroski, Amit Kulkarni, Dirk Stroobandt, Kevin Skadron

Published in: 2016 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), 2016, Page(s) 162-171

DOI: 10.1109/IPDPSW.2016.27

- MiCAP: a custom reconfiguration controller for dynamic circuit specialization

Author(s): Amit Kulkarni, Vipin Kizheppatt, Dirk Stroobandt

Published in: 2015 International Conference on ReConFigurable Computing and FPGAs (ReConFig), 2015, Page(s) 1-6

DOI: 10.1109/ReConFig.2015.7393327

- Hardware Design Automation of Convolutional Neural Networks

Author(s): Andrea Solazzo, Emanuele Del Sozzo, Irene De Rose, Matteo De Silvestri, Gianluca C. Durelli, Marco D. Santambrogio

Published in: 2016 IEEE Computer Society Annual Symposium on VLSI (ISVLSI), 2016, Page(s) 224-229

DOI: 10.1109/ISVLSI.2016.101

- Pixie: A heterogeneous Virtual Coarse-Grained Reconfigurable Array for high performance image processing applications

Author(s): A. Kulkarni, A. Werner, F. Fricke, D. Stroobandt and M. Huebner

Published in: 3rd International Workshop on Overlay Architectures for FPGAs (OLAF2017), 2017

- EXTRA: Towards the exploitation of eXascale technology for reconfigurable architectures

Author(s): Dirk Stroobandt, Ana Lucia Varbanescu, Catalin Bogdan Ciobanu, Muhammed Al Kadi, Andreas Brokalakis, George Charitopoulos, Tim Todman, Xinyu Niu, Dionisios Pnevmatikatos, Amit Kulkarni, Elias Vansteenkiste, Wayne Luk, Marco D. Santambrogio, Donatella Sciuto, Michael Huebner, Tobias Becker, Georgi Gaydadjiev, Antonis Nikitakis, Alex J. W. Thom

Published in: 2016 11th International Symposium on Reconfigurable Communication-centric Systems-on-Chip (ReCoSoC), 2016, Page(s) 1-7

DOI: 10.1109/ReCoSoC.2016.7533896

- An FPGA-based high-throughput stream join architecture

Author(s): Charalabos Kritikakis, Grigorios Chrysos, Apostolos Dollas, Dionisios N. Pnevmatikatos

Published in: 2016 26th International Conference on Field Programmable Logic and Applications (FPL), 2016, Page(s) 1-4

DOI: 10.1109/FPL.2016.7577354

- Design and exploration of routing methods for NoC-based multicore systems

Author(s): Poona Bahrebar, Dirk Stroobandt

Published in: 2015 International Conference on ReConFigurable Computing and FPGAs (ReConFig), 2015, Page(s) 1-4

DOI: 10.1109/ReConFig.2015.7393296

- EXTRA: Towards an Efficient Open Platform for Reconfigurable High Performance Computing

Author(s): Catalin Bogdan Ciobanu, Ana Lucia Varbanescu, Dionisios Pnevmatikatos, George Charitopoulos, Xinyu Niu, Wayne Luk, Marco D. Santambrogio, Donatella Sciuto, Muhammed Al Kadi, Michael Huebner, Tobias Becker, Georgi Gaydadjiev, Andreas Brokalakis, Antonis Nikitakis, Alex J. W. Thom, Elias Vansteenkiste, Dirk Stroobandt

Published in: 2015 IEEE 18th International Conference on Computational Science and Engineering, 2015, Page(s) 339-342

DOI: 10.1109/CSE.2015.54

- A Fully Parameterized Virtual Coarse Grained Reconfigurable Array for High Performance Computing Applications

Author(s): Amit Kulkarni, Elias Vansteenkiste, Dirk Stroobandt, Andreas Brokalakis, Antonios Nikitakis

Published in: 2016 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), 2016, Page(s) 265-270

DOI: 10.1109/IPDPSW.2016.13

- A polyhedral model-based framework for dataflow implementation on FPGA devices of iterative stencil loops

Author(s): Giuseppe Natale, Giulio Stramondo, Pietro Bressana, Riccardo Cattaneo, Donatella Sciuto, Marco D. Santambrogio

Published in: Proceedings of the 35th International Conference on Computer-Aided Design – ICCAD ’16, 2016, Page(s) 1-8

DOI: 10.1145/2966986.2966995

- Heterogeneous exascale supercomputing: the role of CAD in the exaFPGA project

Author(s): M. Rabozzi, G. Natale, E. Del Sozzo, A. Scolari, L. Stornaiuolo, and M. D. Santambrogio

Published in: 2017 Design, Automation & Test in Europe Conference & Exhibition (DATE), 2017

- On the Automation of High Level Synthesis of Convolutional Neural Networks

Author(s): Emanuele Del Sozzo, Andrea Solazzo, Antonio Miele, Marco D. Santambrogio

Published in: 2016 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), 2016, Page(s) 217-224

DOI: 10.1109/IPDPSW.2016.153

- Towards a Performance-Aware Power Capping Orchestrator for the Xen Hypervisor

Author(s): M. Arnaboldi, M. Ferroni, M. D. Santambrogio

Published in: Embed With Linux (EWiLi) Workshop 2016, 2016

- ProFAX: A hardware acceleration of a protein folding algorithm

Author(s): Giulia Guidi, Lorenzo Di Tucci, Marco D. Santambrogio

Published in: 2016 IEEE 2nd International Forum on Research and Technologies for Society and Industry Leveraging a better tomorrow (RTSI), 2016, Page(s) 1-6

DOI: 10.1109/RTSI.2016.7740584

- Knowledge Transfer in Automatic Optimisation of Reconfigurable Designs

Author(s): Maciej Kurek, Marc Peter Deisenroth, Wayne Luk, Timothy Todman

Published in: 2016 IEEE 24th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), 2016, Page(s) 84-87

DOI: 10.1109/FCCM.2016.29

- EURECA compilation: Automatic optimisation of cycle-reconfigurable circuits

Author(s): Xinyu Niu, Nicholas Ng, Tomofumi Yuki, Shaojun Wang, Nobuko Yoshida, Wayne Luk

Published in: 2016 26th International Conference on Field Programmable Logic and Applications (FPL), 2016, Page(s) 1-4

DOI: 10.1109/FPL.2016.7577359

- F-CNN: An FPGA-based framework for training Convolutional Neural Networks

Author(s): Wenlai Zhao, Haohuan Fu, Wayne Luk, Teng Yu, Shaojun Wang, Bo Feng, Yuchun Ma, Guangwen Yang

Published in: 2016 IEEE 27th International Conference on Application-specific Systems, Architectures and Processors (ASAP), 2016, Page(s) 107-114

DOI: 10.1109/ASAP.2016.7760779

- A Domain Specific Language for accelerated Multilevel Monte Carlo simulations

Author(s): Ben Lindsey, Matthew Leslie, Wayne Luk

Published in: 2016 IEEE 27th International Conference on Application-specific Systems, Architectures and Processors (ASAP), 2016, Page(s) 99-106

DOI: 10.1109/ASAP.2016.7760778

- A Scalable Dataflow Accelerator for Real Time Onboard Hyperspectral Image Classification

Author(s): Shaojun Wang, Xinyu Niu, Ning Ma, Wayne Luk, Philip Leong, Yu Peng

Published in: Part of the Lecture Notes in Computer Science book series (LNCS, volume 9625), 2016, Page(s) 105-116

DOI: 10.1007/978-3-319-30481-6_9

- Connect on the fly: Enhancing and prototyping of cycle-reconfigurable modules

Author(s): Hao Zhou, Xinyu Niu, Junqi Yuan, Lingli Wang, Wayne Luk

Published in: 2016 26th International Conference on Field Programmable Logic and Applications (FPL), 2016, Page(s) 1-8

DOI: 10.1109/FPL.2016.7577332

- Optimising Sparse Matrix Vector multiplication for large scale FEM problems on FPGA

Author(s): Paul Grigoras, Pavel Burovskiy, Wayne Luk, Spencer Sherwin

Published in: 2016 26th International Conference on Field Programmable Logic and Applications (FPL), 2016, Page(s) 1-9

DOI: 10.1109/FPL.2016.7577352

- CASK – Open-Source Custom Architectures for Sparse Kernels

Author(s): Paul Grigoras, Pavel Burovskiy, Wayne Luk

Published in: Proceedings of the 2016 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays – FPGA ’16, 2016, Page(s) 179-184

DOI: 10.1145/2847263.2847338

- A Decoupled Access-Execute Architecture for Reconfigurable Accelerators

Author(s): George Charitopoulos, Charalampos Vatsolakis, Stefanos Sidiropoulos, Grigorios Chrysos and Dionisios Pnevmatikatos

Published in: 11th HiPEAC Workshop on Reconfigurable Computing (WRC’2017), 2017

- Customizable Memory Systems for High Performance Reconfigurable Architectures

Author(s): Catalin Ciobanu, Giulio Stramondo, Ana Lucia Varbanescu

Published in: International Summer School on Advanced Computer Architecture and Compilation for High-Performance and Embedded (ACACES) 2016, 2016

- A Scalable Dataflow Implementation of Curran’s Approximation Algorithm

Author(s): Anna Maria Nestorov, Enrico Reggiani, Marco Domenico Santambrogio, Pavel Burovskiy, Hristina Palikareva and Tobias Becker

Published in: Reconfigurable Architectures Workshop, 2017

- From exaflop to exaflow

Author(s): Tobias Becker, Pavel Burovskiy, Anna Maria Nestorov, Hristina Palikareva, Enrico Reggiani, Georgi Gaydadjiev

Published in: Proceedings of the Design Automation and Test in Europe Conference, 2017

- How effective are custom parallel memories

Author(s): Giulio Stramondo, Ana Lucia Varbanescu and Catalin Bogdan Ciobanu

Published in: ICT.Open, 2017

- The Case for Custom Parallel Memories: an Application-centric Analysis

Author(s): Giulio Stramondo, Catalin Ciobanu, Ana Lucia Varbanescu

Published in: 2016

Peer reviewed articles (14)

- Abacus turn model-based routing for NoC interconnects with switch or link failures

Author(s): Poona Bahrebar, Dirk Stroobandt

Published in: Microprocessors and Microsystems, Issue 59, 2018, Page(s) 69-91, ISSN 0141-9331

DOI: 10.1016/j.micpro.2018.01.005

- Quantum Chemistry in Dataflow: Density-Fitting MP2

Author(s): Bridgette Cooper, Stephen Girdlestone, Pavel Burovskiy, Georgi Gaydadjiev, Vitali Averbukh, Peter J. Knowles, Wayne Luk

Published in: Journal of Chemical Theory and Computation, Issue 13/11, 2017, Page(s) 5265-5272, ISSN 1549-9618

DOI: 10.1021/acs.jctc.7b00649

- How Preserving Circuit Design Hierarchy During FPGA Packing Leads to Better Performance

Author(s): Dries Vercruyce, Elias Vansteenkiste, Dirk Stroobandt

Published in: IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, Issue 37/3, 2018, Page(s) 629-642, ISSN 0278-0070

DOI: 10.1109/TCAD.2017.2717786

- FP-BNN: Binarized neural network on FPGA

Author(s): Shuang Liang, Shouyi Yin, Leibo Liu, Wayne Luk, Shaojun Wei

Published in: Neurocomputing, Issue 275, 2018, Page(s) 1072-1086, ISSN 0925-2312

DOI: 10.1016/j.neucom.2017.09.046

- Run-time Reconfigurable Acceleration for Genetic Programming Fitness Evaluation in Trading Strategies

Author(s): Andreea-Ingrid Funie, Paul Grigoras, Pavel Burovskiy, Wayne Luk, Mark Salmon

Published in: Journal of Signal Processing Systems, Issue 90/1, 2018, Page(s) 39-52, ISSN 1939-8018

DOI: 10.1007/s11265-017-1244-8

- How to Efficiently Reconfigure Tunable Lookup Tables for Dynamic Circuit Specialization

Author(s): Amit Kulkarni, Dirk Stroobandt

Published in: International Journal of Reconfigurable Computing, Issue 2016, 2016, Page(s) 1-12, ISSN 1687-7195

DOI: 10.1155/2016/5340318

- Floorplanning Automation for Partial-Reconfigurable FPGAs via Feasible Placements Generation

Author(s): Marco Rabozzi, Gianluca Carlo Durelli, Antonio Miele, John Lillis, Marco Domenico Santambrogio

Published in: IEEE Transactions on Very Large Scale Integration (VLSI) Systems, Issue 25/1, 2017, Page(s) 151-164, ISSN 1063-8210

DOI: 10.1109/TVLSI.2016.2562361

- MiCAP-Pro: a high speed custom reconfiguration controller for Dynamic Circuit Specialization

Author(s): Amit Kulkarni, Dirk Stroobandt

Published in: Design Automation for Embedded Systems, Issue 20/4, 2016, Page(s) 341-359, ISSN 0929-5585

DOI: 10.1007/s10617-016-9180-6

- On How to Accelerate Iterative Stencil Loops

Author(s): Riccardo Cattaneo, Giuseppe Natale, Carlo Sicignano, Donatella Sciuto, Marco Domenico Santambrogio

Published in: ACM Transactions on Architecture and Code Optimization, Issue 12/4, 2016, Page(s) 1-26, ISSN 1544-3566

DOI: 10.1145/2842615

- Performance-driven instrumentation and mapping strategies using the LARA aspect-oriented programming approach

Author(s): João M. P. Cardoso, José G. F. Coutinho, Tiago Carvalho, Pedro C. Diniz, Zlatko Petrov, Wayne Luk, Fernando Gonçalves

Published in: Software: Practice and Experience, Issue 46/2, 2016, Page(s) 251-287, ISSN 0038-0644

DOI: 10.1002/spe.2301

- Leveraging FPGAs for Accelerating Short Read Alignment

Author(s): James Arram, Thomas Kaplan, Wayne Luk, Peiyong Jiang

Published in: IEEE/ACM Transactions on Computational Biology and Bioinformatics, 2017, Page(s) 1-1, ISSN 1545-5963

DOI: 10.1109/TCBB.2016.2535385

- A Domain Specific Approach to High Performance Heterogeneous Computing

Author(s): Gordon Inggs, David B. Thomas, Wayne Luk

Published in: IEEE Transactions on Parallel and Distributed Systems, Issue 28/1, 2017, Page(s) 2-15, ISSN 1045-9219

DOI: 10.1109/TPDS.2016.2563427

- NeuroFlow: A General Purpose Spiking Neural Network Simulation Platform using Customizable Processors

Author(s): Kit Cheung, Simon R. Schultz, Wayne Luk

Published in: Frontiers in Neuroscience, Issue 9, 2016, ISSN 1662-453X

DOI: 10.3389/fnins.2015.00516

- Transparent In-Circuit Assertions for FPGAs

Author(s): Eddie Hung, Tim Todman, Wayne Luk

Published in: IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 2017, Page(s) 1-1, ISSN 0278-0070

DOI: 10.1109/TCAD.2016.2618862

Book chapters (1)

- Chapter Four – Data Flow Computing in Geoscience Applications

Author(s): L. Gan, H. Fu, O. Mencer, W. Luk, G. Yang

Published in: 2017, Page(s) 125-158

DOI: 10.1016/bs.adcom.2016.09.005

- UNIVERSITEIT GENT – Belgium

- Telecommunication Systems Institute – Greece

- IMPERIAL COLLEGE OF SCIENCE TECHNOLOGY AND MEDICINE – United Kingdom

- POLITECNICO DI MILANO – Italy

- UNIVERSITEIT VAN AMSTERDAM – Netherlands

- RUHR-UNIVERSITAET BOCHUM – Germany

- MAXELER TECHNOLOGIES LIMITED – United Kingdom

- SYNELIXIS LYSEIS PLIROFORIKIS AUTOMATISMOU & TILEPIKOINONION ANONIMI ETAIRIA – Greece

- THE CHANCELLOR MASTERS AND SCHOLARS OF THE UNIVERSITY OF CAMBRIDGE – United Kingdom

Other Projects

VARCITIES

ePower

BLEeper

WMatch

AMPERE

EDRA

Partensor

ECOSCALE

EXTRA

COSSIM

QualiMaster

SpeDial

FI-STAR

LEADS

HERMES

COSSIM

A Novel, Comprehensible, Ultra-Fast, Security-Aware CPS Simulator

- Funding: European Commission (EU)

- Project Code: COSSIM

- Programme: Horizon 2020 (H2020-EU.2.1.1.1.)

- Budget: €294,514.04 (total: €2,882,030)

- Start Date: 1 February 2015

- Duration: 36 months

- Website(s): cossim.org, CORDIS

More Information

One of the main problems CPS designers face is “the lack of simulation tools and models for system design and analysis”. This is mainly because most existing simulation tools for complex CPS efficiently handle only parts of a system and focus mainly on performance. Moreover, they require extreme amounts of processing resources and computation time to accurately simulate the CPS nodes’ processing. Faster approaches are available; however, because they operate at high levels of abstraction, they cannot provide the accuracy required to model the exact behavior of the system under design, and so cannot guarantee that it meets its performance and/or energy-consumption requirements.

The COSSIM project will address all those needs by providing an open-source framework which will

- seamlessly simulate, in an integrated way, both the networking and the processing parts of the CPS,

- perform the simulations orders of magnitude faster,

- provide much more accurate results especially in terms of power consumption than existing solutions,

- report more CPS aspects than any existing tool including the underlying security of the CPS.

COSSIM will achieve the above by developing a novel simulator framework based on a processing simulation sub-system (i.e. a “full-system simulator”), which will be integrated with a novel network simulator. Furthermore, innovative power consumption and security measurement models will be developed and incorporated into the final framework. On top of that, COSSIM will address another critical aspect of an accurate CPS simulation environment: performance, measured as the required simulation time. By applying hardware acceleration through field programmable gate arrays (FPGAs), which have proven extremely efficient in relevant tasks, COSSIM will create a framework that is orders of magnitude faster than existing solutions while also being more accurate and reporting more CPS aspects.

Publications

Book chapters

- COSSIM: A Novel, Comprehensible, Ultra-Fast, Security-Aware CPS Simulator

Author(s): Ioannis Papaefstathiou, Gregory Chrysos, Lambros Sarakis

Published in: Applied Reconfigurable Computing, 2015, Page(s) 542-553

DOI: 10.1007/978-3-319-16214-0_50

Peer reviewed articles

- Toothbrush motion analysis to help children learn proper tooth brushing

Author(s): Marco Marcon, Augusto Sarti, Stefano Tubaro

Published in: Computer Vision and Image Understanding, Issue 148, 2016, Page(s) 34-45, ISSN 1077-3142

DOI: 10.1016/j.cviu.2016.03.009

- SYNELIXIS LYSEIS PLIROFORIKIS AUTOMATISMOU & TILEPIKOINONION ANONIMI ETAIRIA – Greece

- STMICROELECTRONICS SRL – Italy

- MAXELER TECHNOLOGIES LIMITED – United Kingdom

- FUNDACION TECNALIA RESEARCH & INNOVATION – Spain

- SEARCH-LAB BIZTONSAGI ERTEKELO ELEMZO ES KUTATO LABORATORIUM KORLATOLTFELELOSSEGU TARSASAG – Hungary

- CHALMERS TEKNISKA HOEGSKOLA AB – Sweden

- POLITECNICO DI MILANO – Italy

- Telecommunication Systems Institute – Greece

QualiMaster

A configurable real-time data processing infrastructure mastering autonomous quality adaptation

- Funding: European Commission (EU)

- Project Code: QualiMaster

- Programme: SEVENTH FRAMEWORK PROGRAMME (FP7-ICT)

- Budget: €691,938 (total: €3,745,066)

- Start Date: 1 January 2014

- Duration: 36 months

- Website(s): qualimaster.eu, CORDIS

Information

The growing number of fine-granular data streams opens up new opportunities for improved risk analysis, situation and evolution monitoring, and event detection. However, there are still major roadblocks to leveraging the full potential of data stream processing as would be needed, for example, for the highly relevant systemic risk analysis in the financial domain.

The QualiMaster project will address those roadblocks by developing novel approaches for autonomously dealing with load and need changes in large-scale data stream processing, while opportunistically exploiting the available resources to increase analysis depth whenever possible. For this purpose, the QualiMaster infrastructure will enable autonomous proactive, reflective and cross-pipeline adaptation, in addition to the more traditional reactive adaptation.

Starting from configurable stream processing pipelines, adaptation will be based on quality-aware component description, pipeline optimization and the systematic exploitation of families of approximate algorithms with different quality/performance tradeoffs. However, adaptation will not be restricted to the software level alone: we will go a level further by investigating the systematic translation of stream processing algorithms into code for reconfigurable hardware and the synergistic exploitation of such hardware-based processing in adaptive high performance large-scale data processing.

The project focuses on financial analysis based on combining financial data streams and social web data, especially for systemic risk analysis. Our user-driven approach involves two SMEs from the financial sector. Rigorous evaluation with real world data loads from the financial domain enriched with relevant social Web content will further stress the applicability of QualiMaster results.

- A Collection of Software Engineering Challenges for Big Data System Development

Author(s): Andreas Giloj, Klaus Schmid, Holger Eichelberger, Dominik Werle, Oliver Hummel

Published in: IEEE SEAA 2018

Permanent ID: Digital Object Identifier:10.1109/seaa.2018.00066

- Query Analytics over Probabilistic Databases with Unmerged Duplicates

Author(s): Ekaterini Ioannou, Minos Garofalakis

Published in: Institute of Electrical and Electronics Engineers (IEEE) 2015

Permanent ID: Digital Object Identifier:10.1109/tkde.2015.2405507

- Extracting Large Social Networks Using Search Engines

Author(s): Stefan Siersdorfer, Sergej Zerr, Philipp Kemkes, Hanno Ackermann

Permanent ID: Digital Object Identifier:10.1145/2806416.2806582, arXiv:1701.08285

- Extracting Large Social Networks using Internet Archive Data

Author(s): Miroslav Shaltev, Sergej Zerr, Philipp Kemkes, Stefan Siersdorfer, Jan-Hendrik Zab

Permanent ID: Digital Object Identifier:10.1145/2911451.2911467,arXiv:1701.03277

- GOTTFRIED WILHELM LEIBNIZ UNIVERSITAET HANNOVER – Germany

- STIFTUNG UNIVERSITAT HILDESHEIM – Germany

- Telecommunication Systems Institute – Greece

- MAXELER TECHNOLOGIES LIMITED – United Kingdom

SpeDial

Spoken Dialogue Analytics

- Funding: European Commission (EU)

- Project Code: SpeDial

- Programme: SEVENTH FRAMEWORK PROGRAMME (FP7-ICT)

- Budget: €110,240 (total: €1,958,400)

- Start Date: 1 December 2013

- Duration: 24 months

- Website(s): CORDIS

Information

The speech services industry has been growing both for telephony applications and, recently, also for smartphones (e.g., Siri). Despite recent progress in spoken dialogue system (SDS) technologies, the development cycle of speech services still requires significant effort and expertise. A significant portion of this effort is geared towards the development of the domain semantics and associated grammars, system prompts and spoken dialogue call-flow.

We propose a semi-automated process for spoken dialogue service development and speech service enhancement of deployed services, where incoming speech service data are semi-automatically transcribed and analyzed (human-in-the-loop).

A list of mature technologies will be used to

- identify hot-spots in the dialogue and propose alternative call-flow structures,

- mine for relevant data to enhance grammars and

- mine for relevant data to update service prompts.

Specifically, the technologies used will be: grammar induction, text-mining for language modeling, affective modeling of speech and text data, machine translation, crowd-sourcing, speech recognition/transcription, and ontology induction. The technologies will be integrated in a service doctoring platform that will enhance deployed services using the human-in-the-loop paradigm.

Our business model is quick deployment of a prototype service, followed by service enhancement using our semi-automated service doctoring platform. The reduced development time and time-to-market will provide significant differentiation for SMEs in the speech services area, as well as for end-users. The business opportunity is significant, especially given the consolidation of the speech services industry and the lack of major competition. Our offering is attractive for SMEs in the services area with little expertise in speech service development (B2B) and also for end-users that are developing their own in-house speech service, often with limited success (B2C).

SpeDial is built around the knowledge cascade of technologies, data and services. Automatic or machine-aided algorithms will be used to analyze the data logs from deployed speech services and, in turn, these data will be used to tune the speech service cost-effectively using the algorithms and tools of the SpeDial platform.

The main S&T goal of SpeDial is to devise machine-aided methods for spoken dialogue system enhancement and customization for call-center applications. SpeDial adopts a user-centric approach to SDS design. Rather than simply rolling out algorithms from the research lab to the real world (being hopeful about their usefulness), we have tried to map the requirements of a speech services developer and emulate the logical flow being followed.

In this process, we have identified two scenarios: service enhancement, where the developer starts from an existing application and tries to improve KPI performance and user satisfaction, and service customization, where the developer addresses the special needs of a user population. Thus our second goal is to create a platform that supports cost-effective service doctoring for those two scenarios: enhancement and customization. The platform also includes interfaces for service and user satisfaction monitoring (IVR analytics component).

Our third goal is to create and support a sustainable pool of developers that will be trained to use the platform. Two separate groups of users are targeted: non-commercial users including the research community and speech services developers at end-user companies. All in all, SpeDial has ambitious but realistic goals both for technological and commercial exploitation of project outputs.

- Prosodic Classification of Discourse Markers

Author(s): Cabarrão, Vera; Moniz, Helena; Ferreira, Jaime; Batista, Fernando; Trancoso, Isabel; Ana Isabel Mata; Curto, Sérgio

Permanent ID: Handle:10451/31083

- Cross-language transfer of semantic annotation via targeted crowdsourcing: task design and evaluation

Author(s): Evgeny A. Stepanov, Shammur Absar Chowdhury, Ioannis Klasinas, Marcos Calvo, Ali Orkan Bayer, Giuseppe Riccardi, Emilio Sanchis, Arindam Ghosh

Published in: Springer Science and Business Media LLC 2017

Permanent ID: Digital Object Identifier:10.1007/s10579-017-9396-5

- Fusion of Knowledge-Based and Data-Driven Approaches to Grammar Induction

Author(s): Georgiladakis, Spiros; Unger, Christina; Iosif, Elias; Walter, Sebastian; Cimiano, Philipp; Petrakis, Euripides; Potamianos, Alexandros

Permanent ID: urn:urn:nbn:de:0070-pub-27638625

- Combining multiple approaches to predict the degree of nativeness

Author(s): Ribeiro, E.; Ferreira, J.; Olcoz, J.; Abad, A.; Helena Moniz; Batista, F.; Trancoso, I.

Permanent ID: Handle:10451/31072

- Mapping the Dialog Act Annotations of the LEGO Corpus into the Communicative Functions of ISO 24617-2

Author(s): Ribeiro, Eugénio; Ribeiro, Ricardo; de Matos, David Martins

Permanent ID: arXiv:1612.01404

- The Influence of Context on Dialogue Act Recognition

Author(s): Ribeiro, Eugénio; Ribeiro, Ricardo; de Matos, David Martins

Permanent ID: arXiv:1506.00839

- Improving Speech Recognition through Automatic Selection of Age Group – Specific Acoustic Models

Author(s): Miguel Sales Dias, Annika Hämäläinen, Michael Tjalve, Hugo Meinedo, Thomas Pellegrini, Isabel Trancoso

Published in: Springer International Conference on Computational Processing of Portuguese – PROPOR 2014

Permanent ID: Digital Object Identifier:10.1007/978-3-319-09761-9_2, Handle:10071/15133

- Disfluency Detection Across Domains

Author(s): Moniz, Helena; Ferreira, Jaime; Batista, Fernando; Trancoso, Isabel

Published in: International Phonetic Association 2015

Permanent ID: Handle:10451/31081

- Classificação prosódica de marcadores discursivos

Author(s): Sérgio Curto, Vera Cabarrão, Fernando Batista, Isabel Trancoso, Jaime Ferreira, Helena Moniz, Ana Isabel Mata

Published in: Associação Portuguesa de Linguística 2016

Permanent ID: Handle:10451/31069, Digital Object Identifier:10.21747/2183-9077/rapl2a4, Handle:10071/12800, Digital Object Identifier:10.21747/2183-9077/rapl2

- Automatic Recognition of Prosodic Patterns in Semantic Verbal Fluency Tests – an Animal Naming Task for Edutainment Applications

Author(s): Moniz, Helena; Pompili, Anna; Batista, Fernando; Trancoso, Isabel; Abad, Alberto; Amorim, Cristiana

Published in: International Phonetic Association 2015

Permanent ID: Handle:10451/31073

- Extending AuToBI to prominence detection in European Portuguese

Author(s): Andrew Rosenberg, Helena Moniz, Fernando Batista, Isabel Trancoso, Ana Isabel Mata, Julia Hirschberg

Permanent ID: Handle:10451/31086, Digital Object Identifier:10.21437/speechprosody.2014-43

- NU ECHO INC SOCIETE PAR ACTIONS – Canada

- VOICE WEB ANONYMOS ETAIRIA PAROCHIS PLIROFORION KAI ANAPTYXIS FONITIKON TECHNOLOGION – Greece

- Telecommunication Systems Institute – Greece

- INESC ID – INSTITUTO DE ENGENHARIA DE SISTEMAS E COMPUTADORES, INVESTIGACAO E DESENVOLVIMENTO EM LISBOA – Portugal

- KUNGLIGA TEKNISKA HOEGSKOLAN – Sweden

Related Projects

VARCITIES

ePower

BLEeper

WMatch

AMPERE

EDRA

Partensor

ECOSCALE

EXTRA

COSSIM

QualiMaster

SpeDial

FI-STAR

LEADS

HERMES

RELATED LINKS

Latest News

- Approval of the Evaluation Committee's minutes for the award of one private-law contract for work, within the framework of the project with the acronym "REBECCA – No 101097224", Ε.Π.Ι.Τ.Σ. code 60047. 28 February 2023 - 9:50 am

- Call for expressions of interest for the submission of proposals, Ref. No. 415/60047. 2 February 2023 - 11:13 am

- Launch of the IntellIoT programme, a European research project with a budget of €8 million on the Next Generation of IoT systems. 13 December 2020 - 12:49 pm

ADDRESS

Research University Institute of Telecommunication Systems – ΕΠΙΤΣ

Technical University of Crete

University Campus – Kounoupidiana

Postal Code: 73100, Chania – Crete